[Deprecated] How to prevent a Planetscale database from going into Sleep Mode with the free Hobby Plan

Development

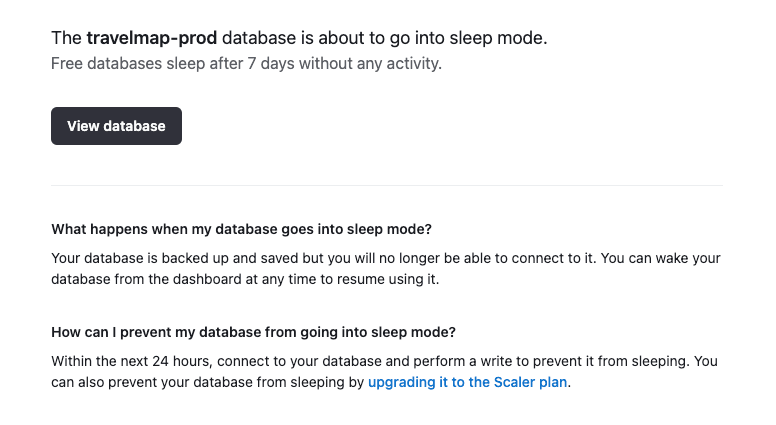

I became quickly frustrated after building a sample project with Planetscale. After a week without write queries, the database goes into sleep mode and one has to log in to Planetscale to wake it up. It’s part of the free plan, called Hobby, although they don’t really say it very explicitly in their plans page. I’ve seen this is becoming a common practice with other free product tiers as well.

So what can we do?

Automate! 🤖

Fortunately, we don’t need to spend hours to develop a solution. Some would be disappointed by this... I can empathize.

How to keep a Planetscale database awake with a cron job

Here’s the recipe:

Make a dummy table.

Make an endpoint, like

PUT/api/keep-alive, that performs a write operation to that table.

Implement a cron job to regularly hit the endpoint. I used GitHub Actions.

This way, your database will receive write queries regularly.

It also makes sense to protect that endpoint with a secret sent via custom header, like x-keep-alive-secret, so only you and the cron job can trigger execute it.

Step by step with a real project

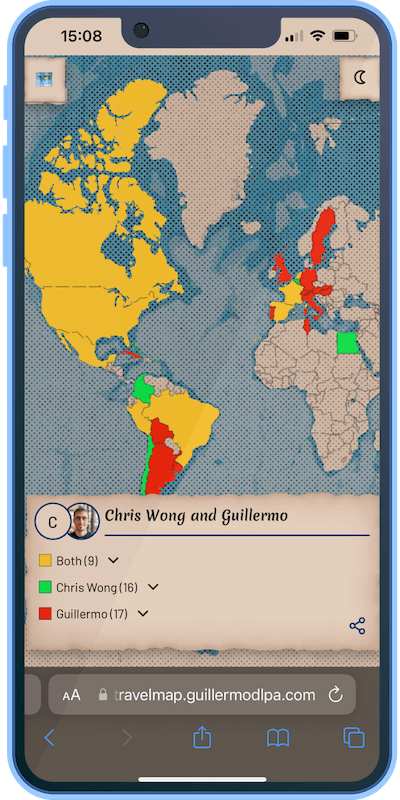

The project needing it was Travelmap. It’s a web app that allows users to highlight visited countries, and overlap their map with maps of other users. The frontend and API are developed with Next.js and hosted in Vercel.

Step 1: Creating the table in Planetscale

I created a table TravelMapCounts using a Planetscale branch.

Instead of making a dummy table without value, I figured it would be nice to watch the count of maps incrementing… over time… (am I naive, yes or yes?).

CREATE TABLE `TravelMapCounts` ( `id` varchar(191) NOT NULL, `individualTravelMapCount` int NOT NULL, `combinedTravelMapCount` int NOT NULL, `created` datetime(3) NOT NULL DEFAULT CURRENT_TIMESTAMP(3), PRIMARY KEY (`id`) ) ENGINE InnoDB, CHARSET utf8mb4, COLLATE utf8mb4_unicode_ci;

In any case, it would be enough with a table having only id and created, or simply id and counter to keep just one row.

Step 2: Implementing the endpoint

In my Next.js app, I created the route PUT /api/keep-alive. It counts all maps and inserts a new record.

// api/keep-alive.ts

export default async function handle(

req: NextApiRequest,

res: NextApiResponse

) {

// [...]

const prisma = getPrismaClient();

const individualCount = await prisma.individualTravelMap.count();

const combinedCount = await prisma.combinedTravelMap.count();

await prisma.travelMapCounts.create({

data: {

individualTravelMapCount: individualCount,

combinedTravelMapCount: combinedCount,

},

});

res.status(200).json({

individualTravelMapCount: individualCount,

combinedTravelMapCount: combinedCount,

});

});Step 3: Implementing The Cron Job in GitHub Actions

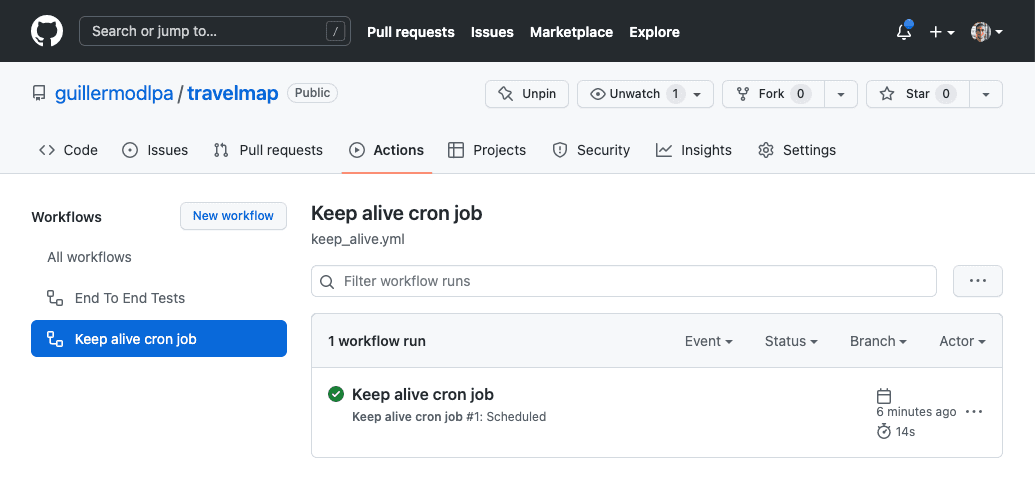

Since the codebase is in GitHub, I leveraged GitHub Actions to implement a scheduled workflow. In fact, Vercel’s docs pointed me there right away, as I was first checking out if Vercel featured cron jobs.

# .github/workflows/keep_alive.yml

name: Keep alive cron job

on:

schedule:

- cron: '0 12 * * *' # daily https://crontab.guru/#0_12_*_*_*

jobs:

cron:

runs-on: ubuntu-latest

steps:

- name: Call endpoint to keep the database alive

run: |

curl --request PUT \

--url '${{ secrets.SITE_URL }}/api/keep-alive'The workflow reads secrets.SITE_URL from the repository secrets that I configured in GitHub.

Step 4: (Optional) Protecting the endpoint with a secret

As I didn’t intend for anybody out there to be able to call the endpoint, I added a custom header check. The expected header value would be set as an environment variable in Vercel, and then read by the endpoint:

const keepAliveApiSecret = req.headers['x-keep-alive-api-secret'];

if (keepAliveApiSecret !== process.env.KEEP_ALIVE_API_SECRET) {

return res.status(403).json({ error: 'Unauthorized' });

}And then, I added the value to the repository secrets in GitHub and passed it on the curl in the workflow.

run: |

curl --request PUT \

--url '${{ secrets.SITE_URL }}/api/keep-alive' \

--header 'x-keep-alive-api-secret: ${{ secrets.KEEP_ALIVE_API_SECRET }}'Check out the workflow implementation here:

And the final endpoint here:

Step 5: Confirming it works!

Opening the Actions view at the scheduled time… voilà, I had the “keep alive” workflow running daily and ensuring my Planetscale free database never went into sleep mode.

As a summary, you can check out all the code changes in the following commit:

I hope this is helpful. For feedback and comments, don’t hesitate to reach out!