Best simple Cursor Rules for better results

After two months of using Cursor extensively at haddock, not for vibe coding but to solve real challenges in a complex codebase, I’ve realized that adding a few rules makes me much more productive.

This way, I can make sure models do what I want them to do and don’t deviate in unexpected ways.

I’ve searched for rules that others use, but I’ve mostly found repositories with way too many rules. A set that large isn’t easy to maintain, and it’s even harder to test how each change affects interactions with the models.

Problems I had with the Agent

❌ Going rogue and making modifications when I was just asking for ideas.

❌ Adding nice-to-have features that I didn’t ask for as part of another task. This often caused unnecessary complexity and new bugs.

❌ Noticing supposed bugs that weren’t bugs while working on another task, and jumping into changes without first checking with me about the change of focus. This always ended up creating new issues.

❌ Being too verbose in comments.

Is prompting a solution?

Prompting is providing input to a model, through the choice of wording, format, context, and instructions, in order to get the desired output. It blows my mind how much impact it can have on general-purpose LLMs.

Cursor Rules are basically sent to the chat as part of the prompt, and they influence the output as much as if you explicitly wrote them at the beginning of each conversation.
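To make that idea concrete, here is a minimal sketch of what "sent as part of the prompt" could look like. Cursor's internals aren't public, so everything here is an assumption for illustration: the `build_messages` helper is hypothetical, and the `system`/`user` roles follow the message format that many chat-style LLM APIs use.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(rules: List[str], history: List[Message], user_input: str) -> List[Message]:
    """Put the rules first, then replay the chat so far, then add the new turn."""
    rules_text = "\n".join(f"- {rule}" for rule in rules)
    # Assumption: the rules travel as a single system message at the top.
    system: Message = {"role": "system", "content": "Follow these rules:\n" + rules_text}
    return [system, *history, {"role": "user", "content": user_input}]


rules = [
    "Before implementing code changes, ask for confirmation on the approach.",
    "Focus on the task I asked (avoid refactors or features I didn't ask for).",
]
messages = build_messages(rules, history=[], user_input="Any ideas for speeding up this query?")
print(messages[0]["content"])
```

The point is only that the model sees the rules as ordinary text ahead of everything else in the conversation, which is why writing them by hand at the start of a chat would have a similar effect.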

So yeah, prompting is a solution.

Simple and effective Cursor rules

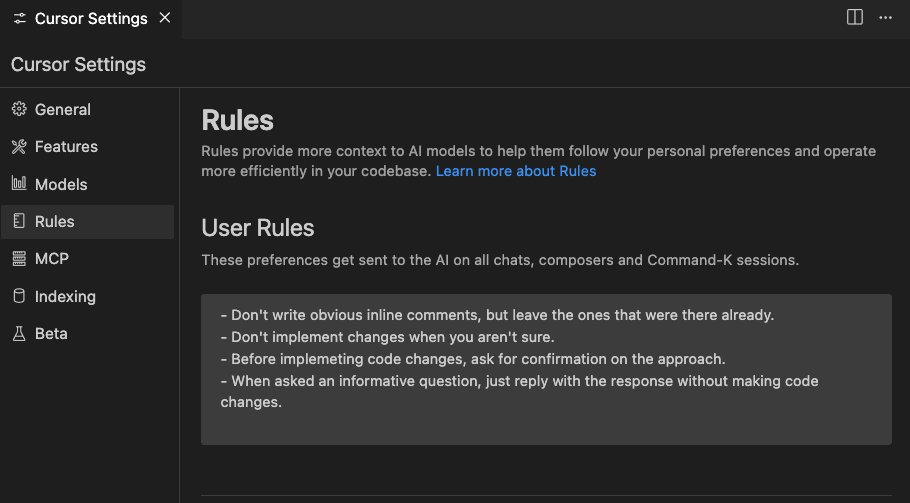

As of June 2025, these are my rules:

Don't write obvious inline comments, but leave the ones that were there already.

Don't implement changes when you aren't sure.

Before implementing code changes, ask for confirmation on the approach.

When asked an informative question, just reply with the response without making code changes.

Focus on the task I asked (avoid refactors or features I didn't ask for).
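Because the set is this small, it's easy to keep under version control and change one rule at a time. The sketch below is just one way to do that: `render_rules` and `write_rules` are hypothetical helpers, and the output path is an assumption, so point it at wherever your setup reads rules from (or paste the rendered text into Cursor's settings).

```python
from pathlib import Path
from typing import List

RULES: List[str] = [
    "Don't write obvious inline comments, but leave the ones that were there already.",
    "Don't implement changes when you aren't sure.",
    "Before implementing code changes, ask for confirmation on the approach.",
    "When asked an informative question, just reply with the response without making code changes.",
    "Focus on the task I asked (avoid refactors or features I didn't ask for).",
]


def render_rules(rules: List[str]) -> str:
    """Render one rule per line so a diff shows exactly which rule changed."""
    return "\n".join(f"- {rule}" for rule in rules) + "\n"


def write_rules(path: Path, rules: List[str]) -> None:
    """Write the rendered rules to a file; the path is up to the caller."""
    path.write_text(render_rules(rules), encoding="utf-8")


print(render_rules(RULES), end="")
```

Calling `write_rules(Path("cursor-rules.txt"), RULES)` would save the same text to a file; that file name is only a placeholder.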

These rules have been very impactful. The results I get have dramatically changed, and I can oversee and direct the agent much more closely.

Instead of being a rogue developer, it’s a conscientious one.

How well does it work?

It works fine as long as the conversation isn’t very long. But for large refactors I’ve noticed that the agent starts to ignore the rules and goes back to its original behaviors. Even new rules I give it on the fly aren’t very effective.

My guess is that the more chat history informs the conversation, the less relevant the rules become.
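A bit of back-of-the-envelope arithmetic shows why that guess is plausible. This is only an illustration of the intuition, not a measurement of how a model weighs instructions: word counts stand in for tokens, and the 60-word rule set and the history sizes are assumed numbers.

```python
def rules_share(rules_words: int, history_words: int) -> float:
    """Fraction of the prompt (by word count) taken up by the rules."""
    return rules_words / (rules_words + history_words)


RULES_WORDS = 60  # assumed size of a short rule set

# Assumed history sizes, from a fresh chat to a very long refactoring session.
for history_words in (0, 500, 5_000, 50_000):
    share = rules_share(RULES_WORDS, history_words)
    print(f"history={history_words:>6} words -> rules are {share:.2%} of the prompt")
```

Under these assumptions the rules go from being the entire prompt to a small fraction of a percent of it, which at least fits the observation that long conversations drift back to the default behavior.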

Next steps

I’ll keep iterating on my rules as I encounter new behaviors that I want models to do more of, or less of.

It’s really fun to play with this, so I recommend you pick up these rules, or make whatever rules you want, and have fun coding and being amused by the agent’s behavior.

Thoughts on prompting for coding Agents

Right now, I’m playing with my own prompt instructions. There isn’t a general standard in the community for customizing the prompts, beyond whatever Cursor or the different models already do internally.

I wonder: will we see sets of prompting rules become standard in the future?

Just as Airbnb’s ESLint config was a widely adopted standard for JavaScript and Node projects some years ago, will there be a community standard for coding agent rules?

Or will we each end up with our own rules per project or per engineer that closely match our personality?